
Pentagon Plans to Spend $2 Billion on AI-Powered Weapons

The Department of Defense (DoD) plans to spend $2 billion over the next five years researching how to incorporate artificial intelligence (AI) into weapons programs. The DoD's research arm, the Defense Advanced Research Projects Agency (DARPA), will conduct the research as a means of competing more effectively with advanced Russian and Chinese military forces.

The budget for this program is relatively small compared to other defense programs. Current programs developing next-generation fighter jets are projected to cost nearly a trillion dollars by the time they conclude. The Manhattan Project, which produced nuclear weapons in the 1940s, cost nearly $28 billion when adjusted for inflation.

AI research is no stranger to military applications. In addition to DARPA's effort, military contractor Booz Allen received an $885 million contract to work on confidential AI programs for the U.S. military over the next five years. Project Maven, another highly controversial military AI project, is slated to receive $93 million next year. Google previously led the initiative but later announced it would end its involvement after employees protested the company's participation.

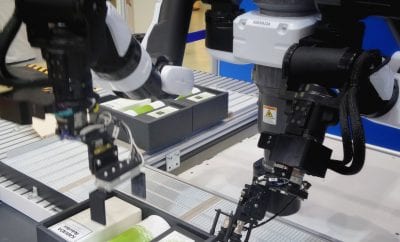

Specific details of the research have not yet been released, but a Pentagon strategy document published last month states that this work will eventually lead to more powerful and deadly autonomous weapons that can "search for, identify, track, select, and engage targets independent of a human operator's input."

Weapons systems with these capabilities already exist, but military officials have been hesitant to hand full control to AI because they lack confidence in its ability to carry out such actions reliably on the battlefield.

Ron Brachman, who managed DARPA's AI programs for three years, said, "We probably need some gigantic Manhattan Project to create an AI system that has the competence of a three-year-old." With this new investment in AI research for weapons, however, we just might get that and more.

Michael Horowitz, who worked on AI issues for the Pentagon in 2013, warned in an interview, "There's a lot of concern about AI safety – [about] algorithms that are unable to adapt to complex reality and thus malfunction in unpredictable ways. It's one thing if what you're talking about is a Google search, but it's another thing if what you're talking about is a weapons system."
